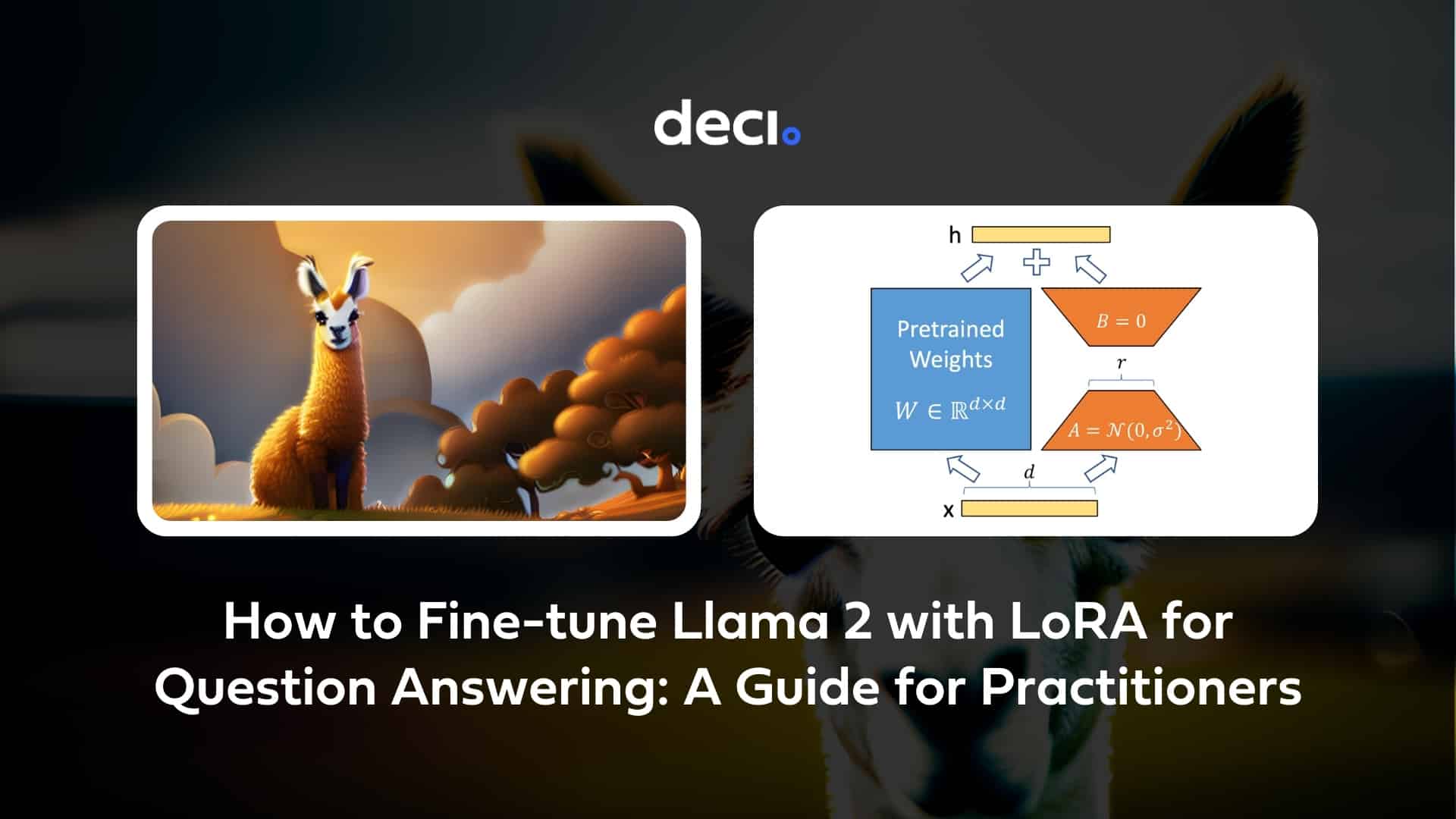

How to Fine-tune Llama 2 with LoRA for Question Answering: A Guide for Practitioners

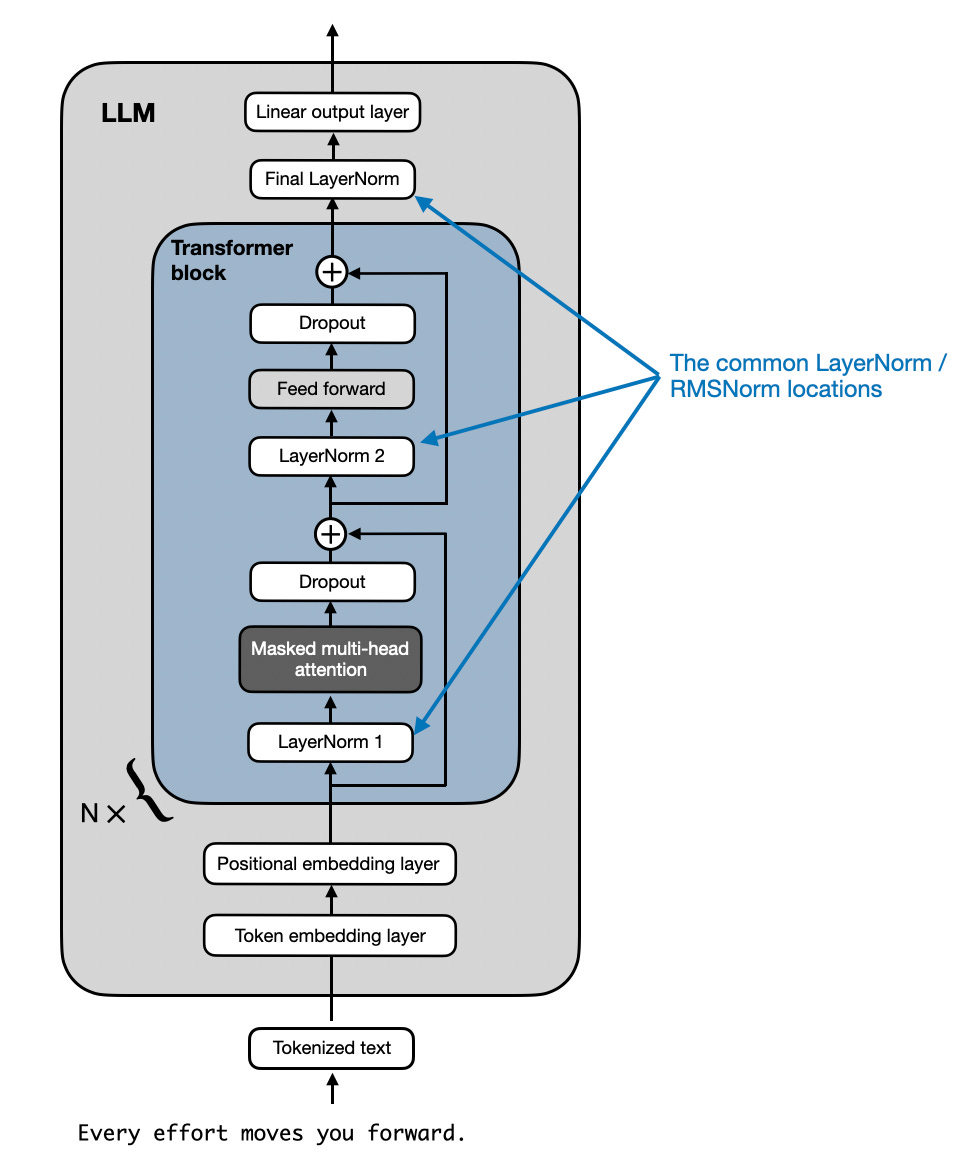

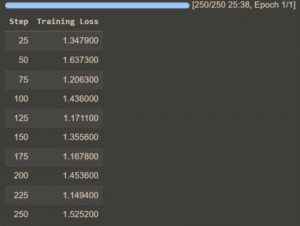

Learn how to fine-tune Llama 2 with LoRA (Low-Rank Adaptation) for question answering. This guide walks through the prerequisites and environment setup, loading the model and tokenizer, and configuring quantization.

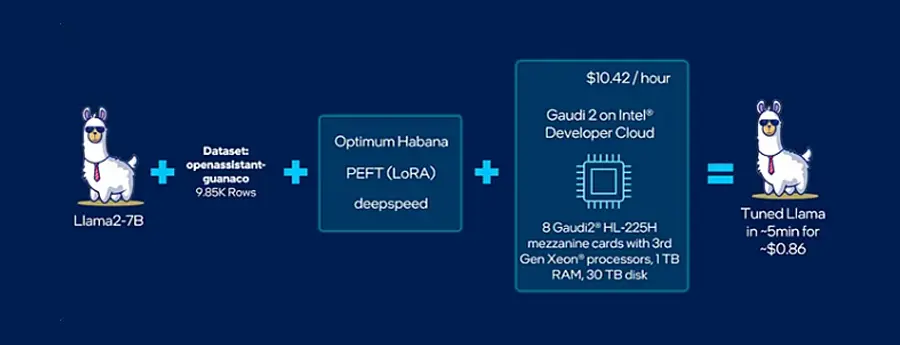

Llama2 Fine-Tuning with Low-Rank Adaptations (LoRA) on Intel

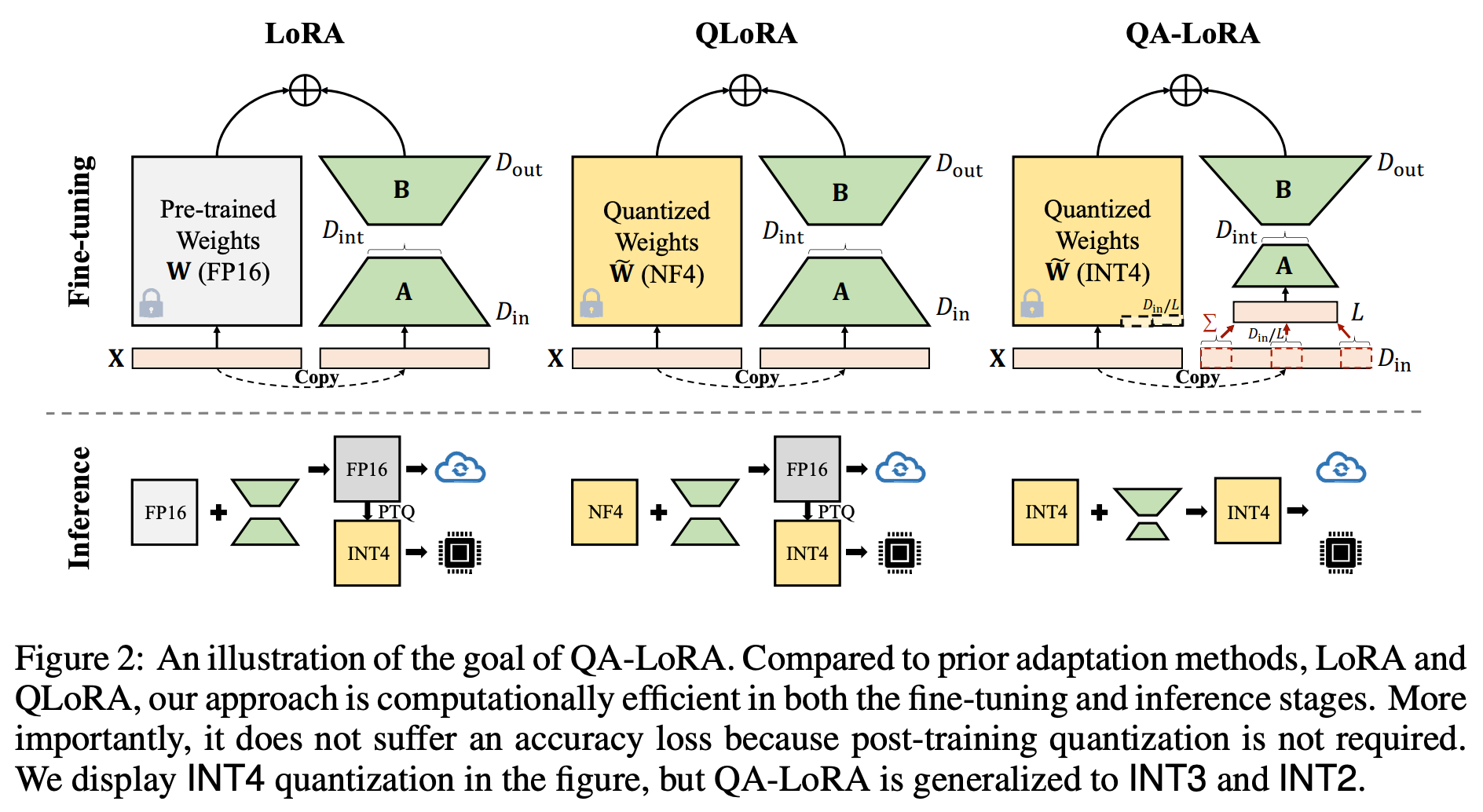

Research Papers in February 2024: A LoRA Successor, Small

Fine tuning a Question Answering model using SQuAD and BERT

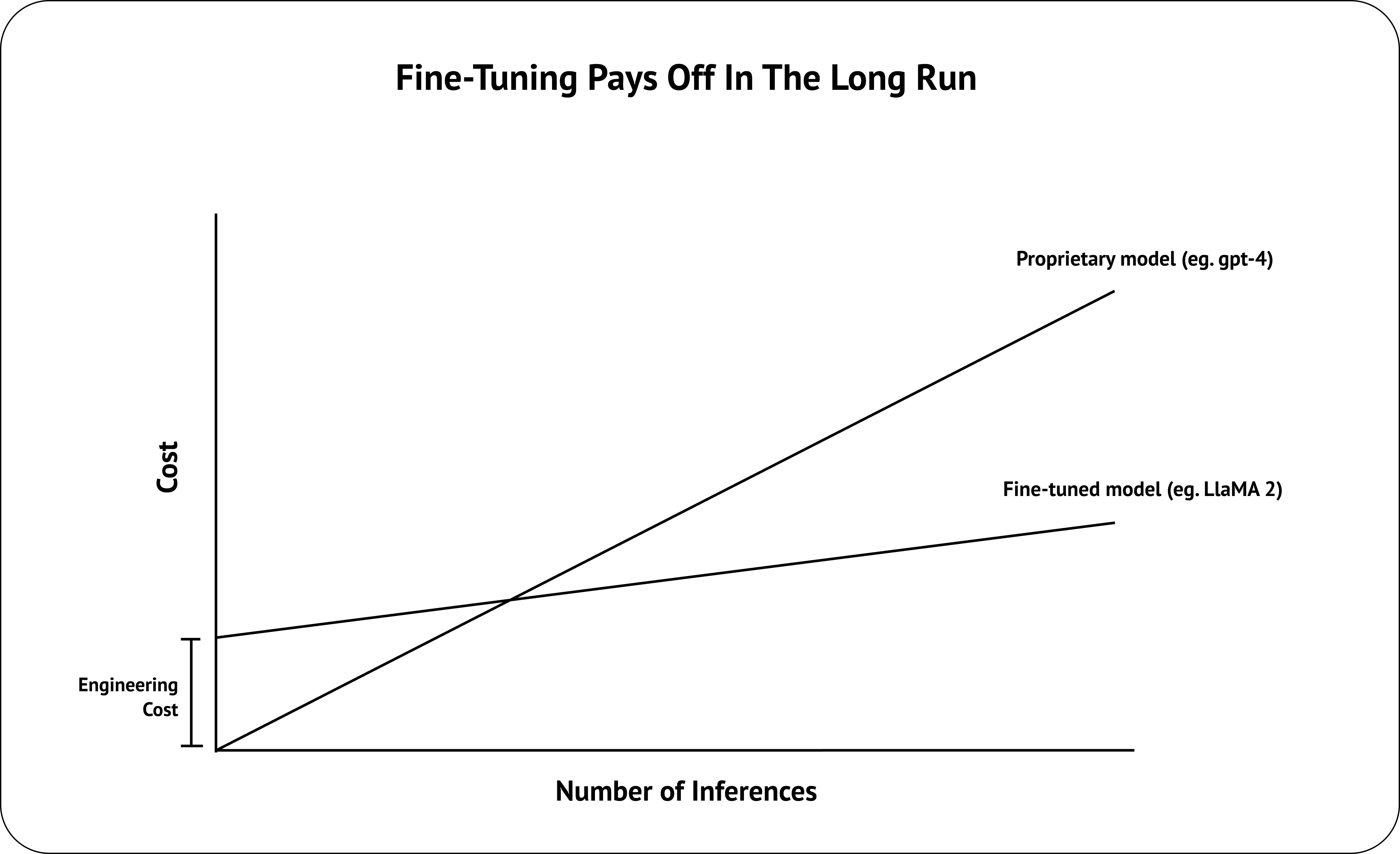

The Ultimate Guide to Fine-Tune LLaMA 2, With LLM Evaluations

Easily Train a Specialized LLM: PEFT, LoRA, QLoRA, LLaMA-Adapter

Fine-tune Llama 2 for text generation on SageMaker

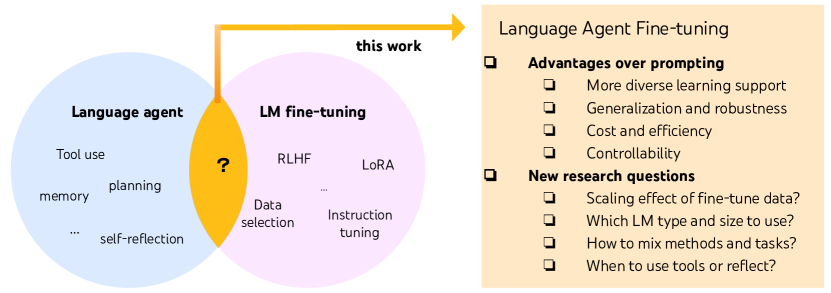

[2310.05915] FireAct: Toward Language Agent Fine-tuning

How to Generate Instruction Datasets from Any Documents for LLM

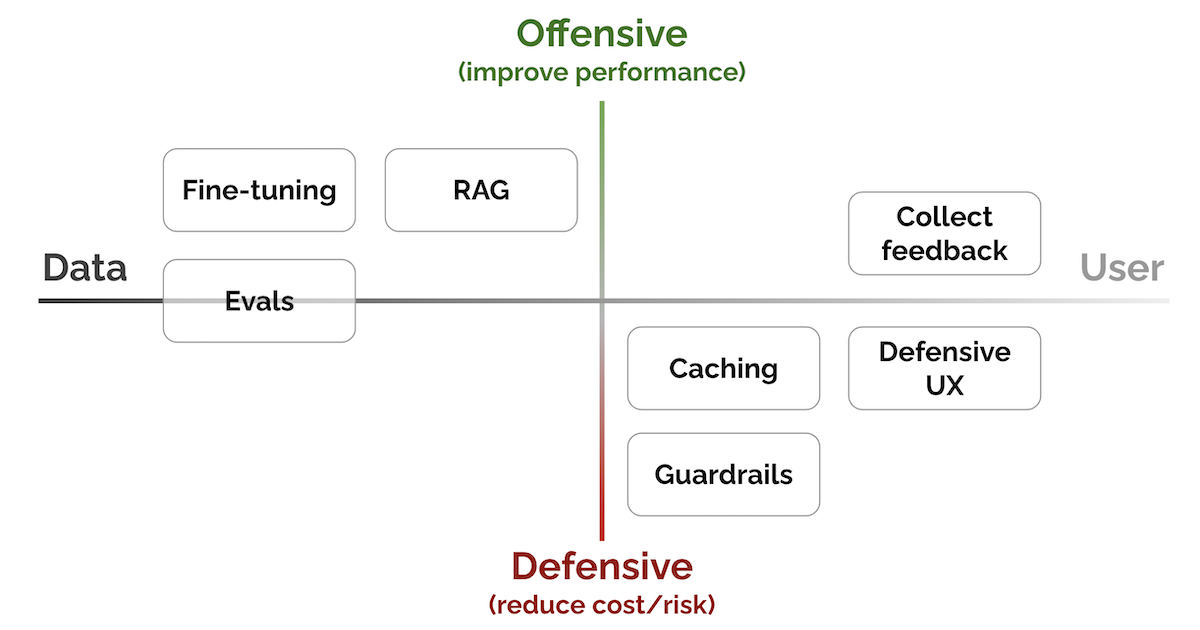

Patterns for Building LLM-based Systems & Products

Sandi Bezjak on LinkedIn: Google DeepMind Introduces Two Unique Machine Learning Models, Hawk And…

Webinar: How to Fine-Tune LLMs with QLoRA

Supervised Fine-tuning: customizing LLMs