Fitting AI models in your pocket with quantization - Stack Overflow

How to make your AI model smaller while keeping the same performance? ⚡ ✂️, by Mohamed Amine Dhiab

Beating GPT-4 with Open Source LLMs — with Michael Royzen of Phind

Stack Overflow podcasts

deep learning - QAT output nodes for Quantized Model got the same min max range - Stack Overflow

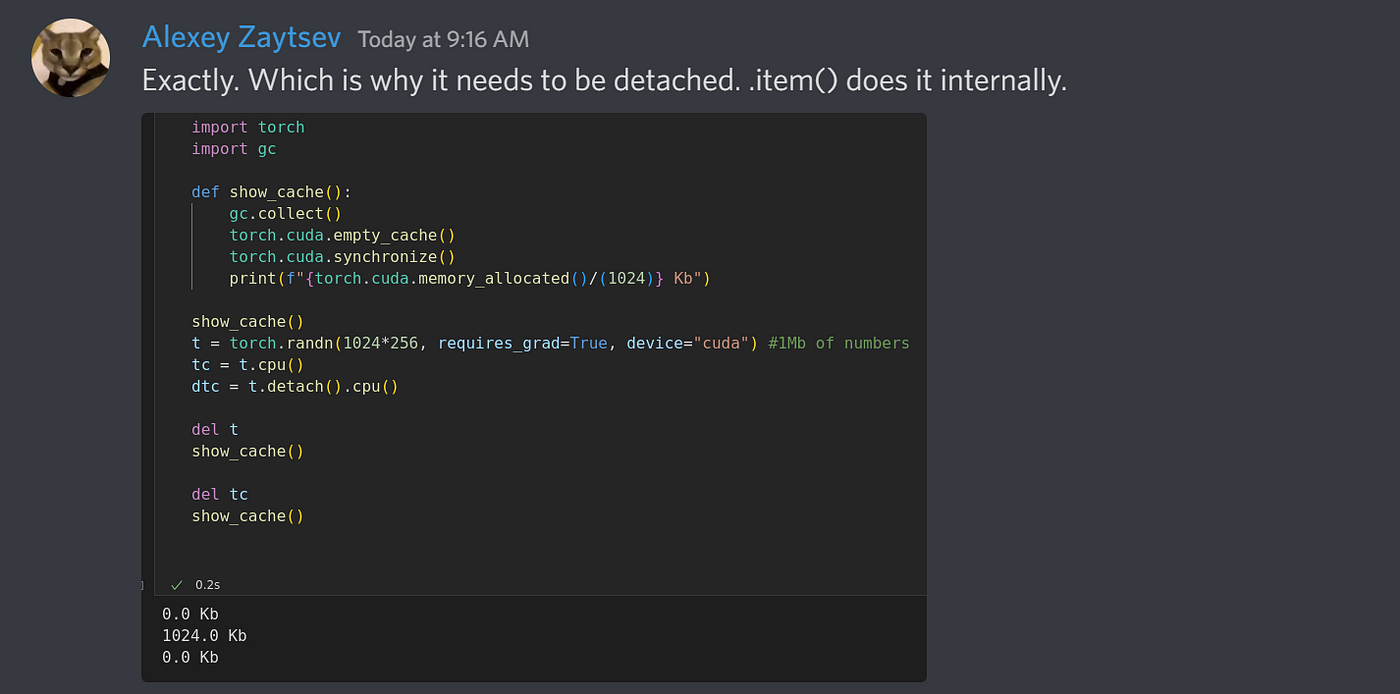

Solving the “RuntimeError: CUDA Out of memory” error, by Nitin Kishore

Navigating the Intricacies of LLM Inference & Serving - Gradient Flow

What is your experience with artificial intelligence, and can you…

The New Era of Efficient LLM Deployment - Gradient Flow

Running LLMs using BigDL-LLM on Intel Laptops and GPUs – Silicon Valley Engineering Council

Introduction to AI Model Quantization Formats, by Gen. David L.

Can you work on conversational AI at home? - Quora

Andrew Jinguji on LinkedIn: Fitting AI models in your pocket with quantization

Bilawal Sidhu on LinkedIn: #generativeai #artificialintelligence