What's in the RedPajama-Data-1T LLM training set

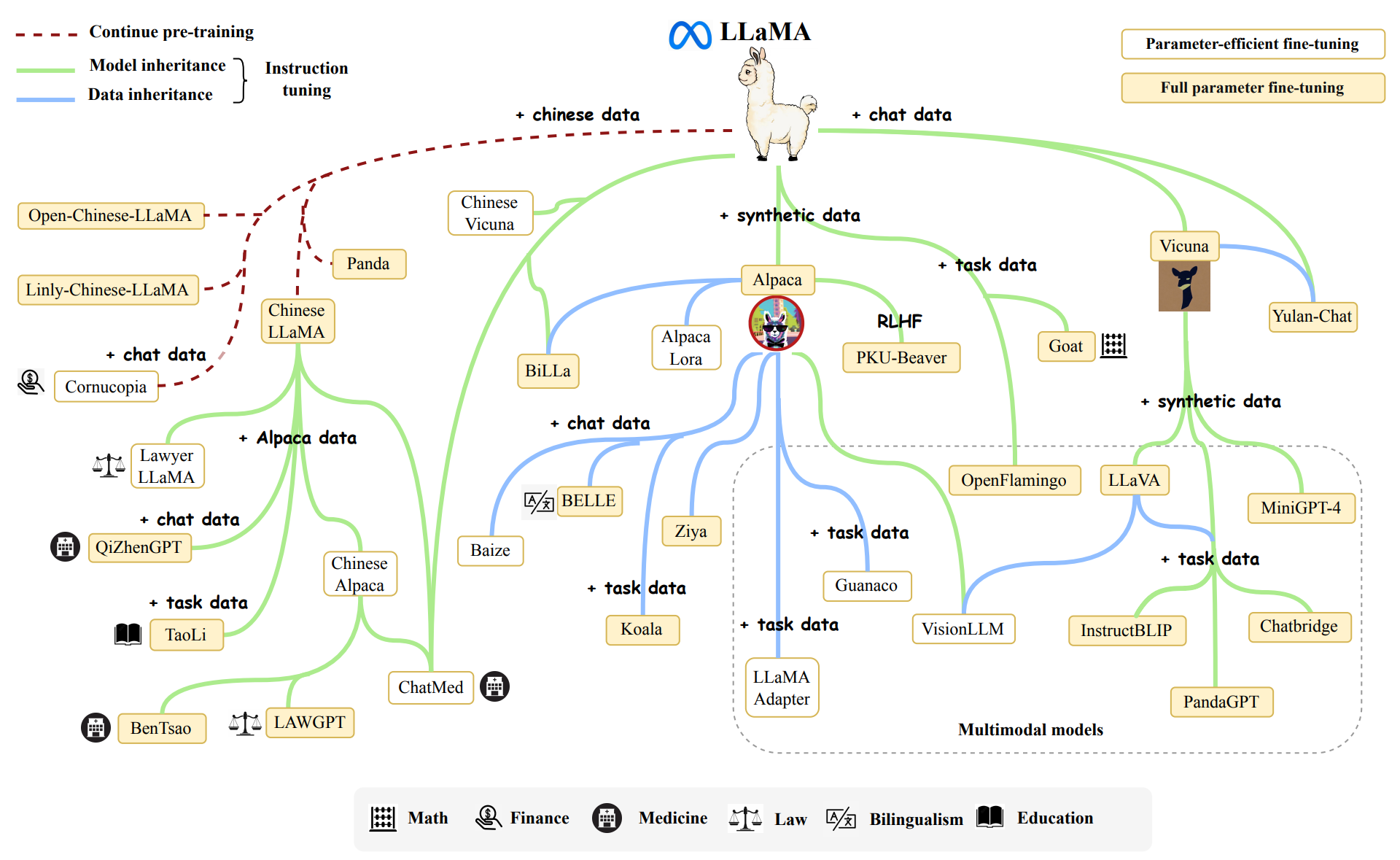

RedPajama is “a project to create leading open-source models, starts by reproducing LLaMA training dataset of over 1.2 trillion tokens”. It’s a collaboration between Together, Ontocord.ai, ETH DS3Lab, Stanford CRFM, …

RedPajama Reproducing LLaMA🦙 Dataset on 1.2 Trillion Tokens

The Practical Guide to LLMs: RedPajama, by Georgian

Fine-Tuning Insights: Using LLMs as Preprocessors to Improve

From ChatGPT to LLaMA to RedPajama: I'm Switching My Interest to

65-Billion-Parameter Large Model Pretraining Accelerated by 38

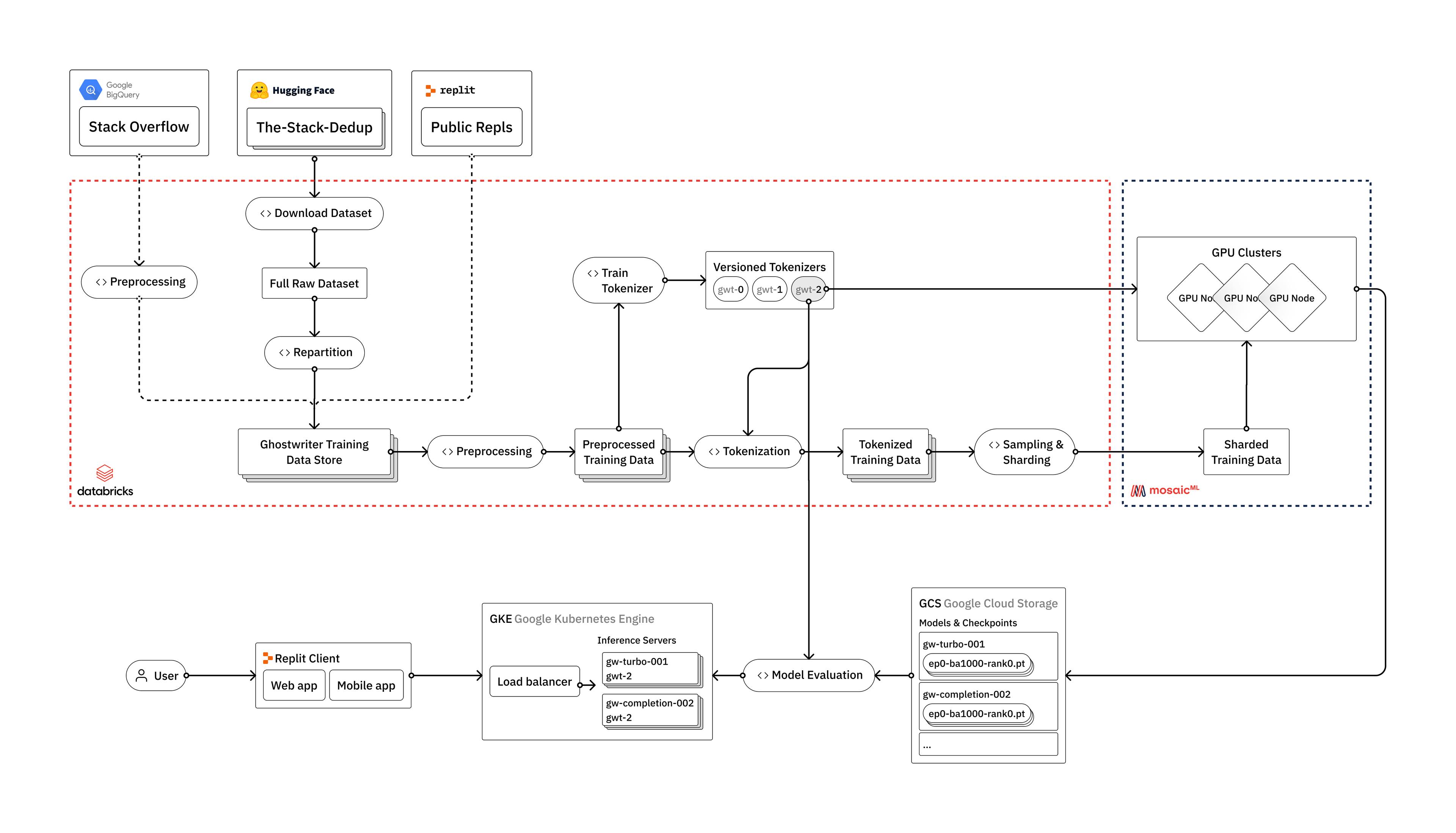

Replit — How to train your own Large Language Models

Web LLM runs the vicuna-7b Large Language Model entirely in your

Data Machina #198 - Data Machina

Fast and cost-effective LLaMA 2 fine-tuning with AWS Trainium

Artificial Intelligence – Page 3 – Data Machina Newsletter – a

Black-Box Detection of Pretraining Data

Together AI Releases RedPajama v2: An Open Dataset with 30

Inside language models (from GPT to Olympus) – Dr Alan D. Thompson