How to Measure FLOP/s for Neural Networks Empirically? – Epoch

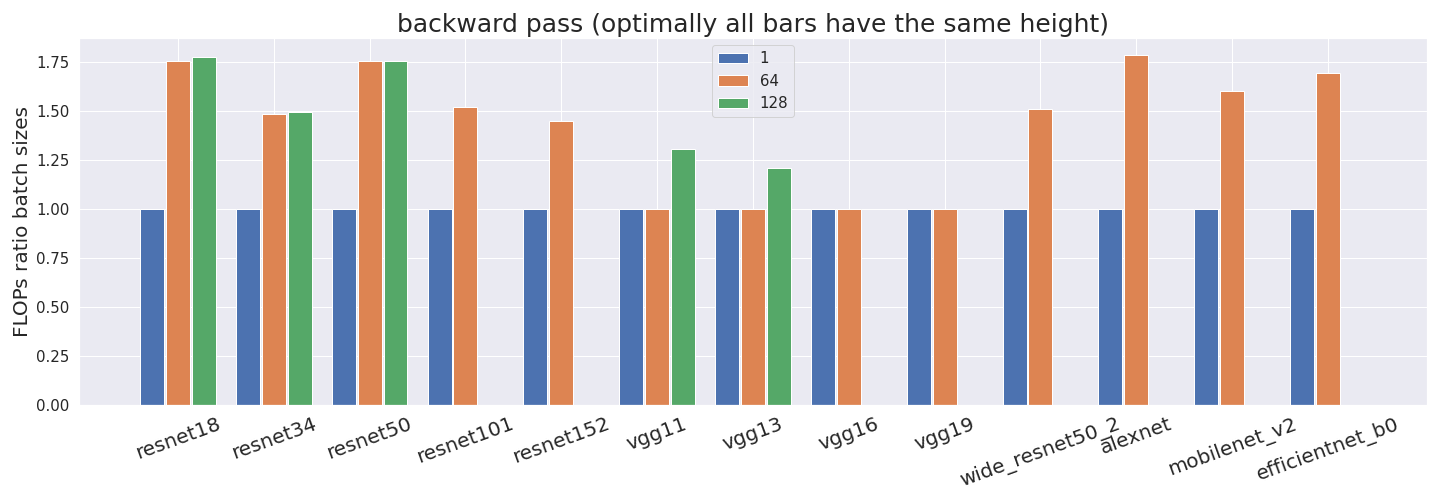

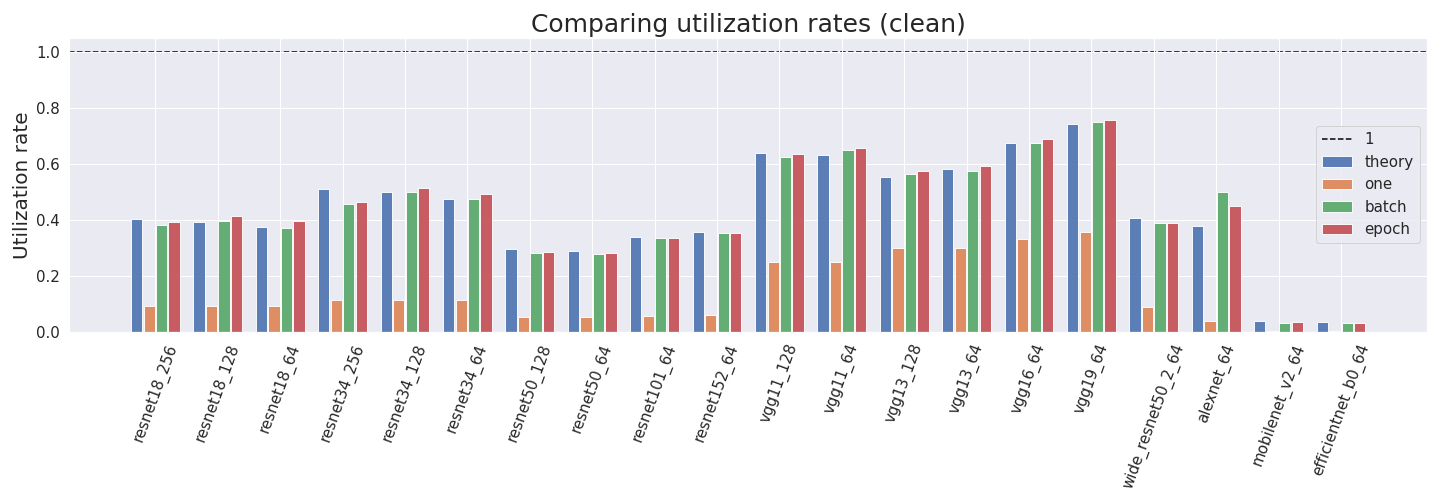

Computing the utilization rate for multiple Neural Network architectures.
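
Below is a minimal sketch of that calculation. It assumes utilization is defined as the empirically achieved FLOP/s divided by the hardware's peak FLOP/s. The workload is a plain-Python matrix multiply, which stands in for a neural network. FLOPs are counted analytically as 2·m·k·n, and the result is divided by a peak figure you supply. All function names and the 1e12 peak in the usage note are hypothetical placeholders. A real measurement would time the actual model on the target accelerator and take the peak from the vendor's specification.

```python
import random
import time


def matmul_flops(m: int, k: int, n: int) -> int:
    """Analytic FLOP count for an (m x k) @ (k x n) matrix product.

    Each of the m*n outputs needs k multiplications and k additions,
    i.e. one multiply-accumulate counted as 2 FLOPs, giving 2*m*k*n.
    """
    return 2 * m * k * n


def naive_matmul(a, b):
    """Plain-Python matrix product, used only as a workload to time."""
    k = len(b)
    n = len(b[0])
    return [[sum(row[p] * b[p][j] for p in range(k)) for j in range(n)] for row in a]


def measure_flops_per_second(m: int, k: int, n: int, repeats: int = 3) -> float:
    """Empirical FLOP/s: analytic FLOP count divided by the best wall-clock time."""
    a = [[random.random() for _ in range(k)] for _ in range(m)]
    b = [[random.random() for _ in range(n)] for _ in range(k)]
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        naive_matmul(a, b)
        best = min(best, time.perf_counter() - start)
    return matmul_flops(m, k, n) / best


def utilization_rate(achieved_flops_per_s: float, peak_flops_per_s: float) -> float:
    """Fraction of the (assumed) peak throughput that was actually achieved."""
    return achieved_flops_per_s / peak_flops_per_s
```

For example, `utilization_rate(measure_flops_per_second(64, 64, 64), 1e12)` compares the measured throughput against an assumed 1 TFLOP/s peak. Taking the best of several runs reduces noise from warm-up and scheduling.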

The Flip-flop neuron – A memory efficient alternative for solving challenging sequence processing and decision making problems

Missing well-log reconstruction using a sequence self-attention deep-learning framework

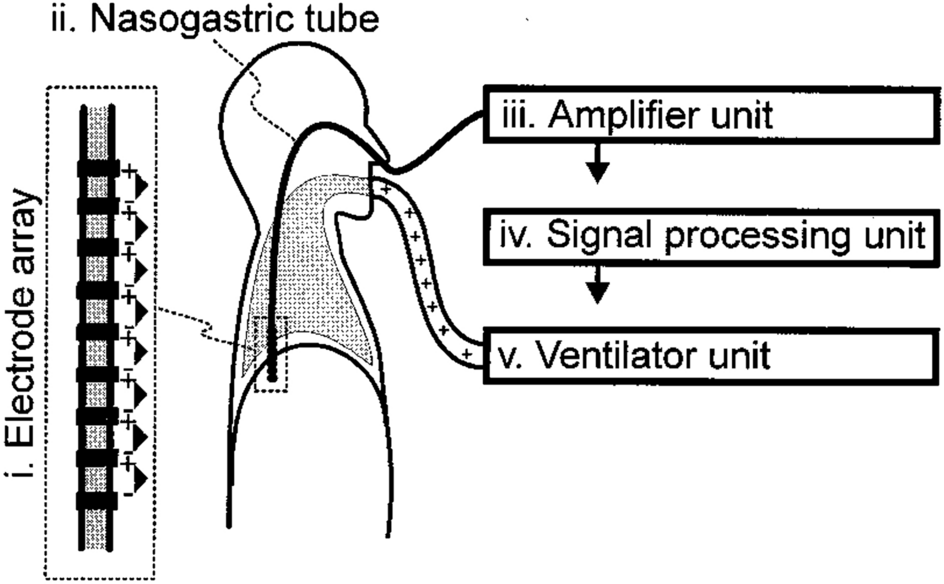

Convolutional neural network-based respiration analysis of electrical activities of the diaphragm

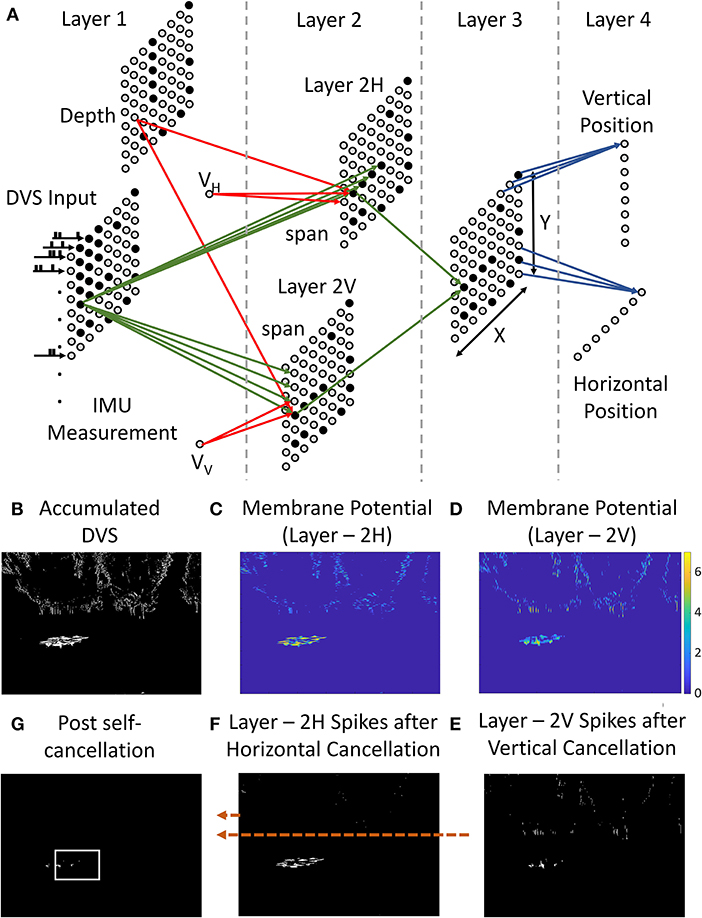

Frontiers | Bio-mimetic high-speed target localization with fused frame and event vision for edge application

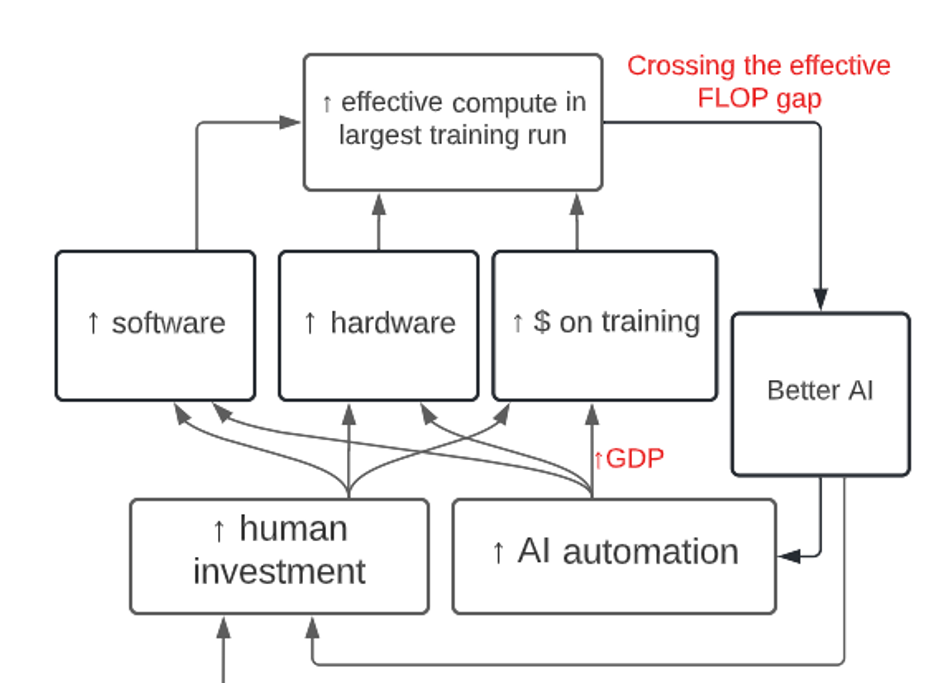

What a Compute-Centric Framework Says About Takeoff Speeds

The FLOPs (floating point operations) and SPE (seconds per epoch) of

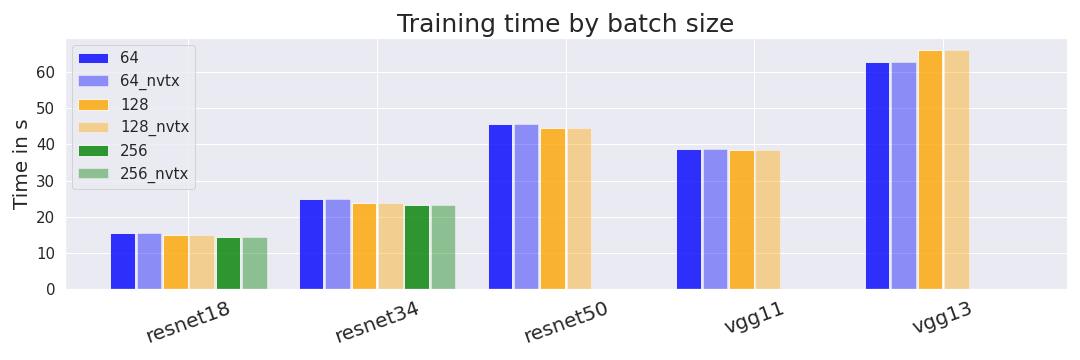

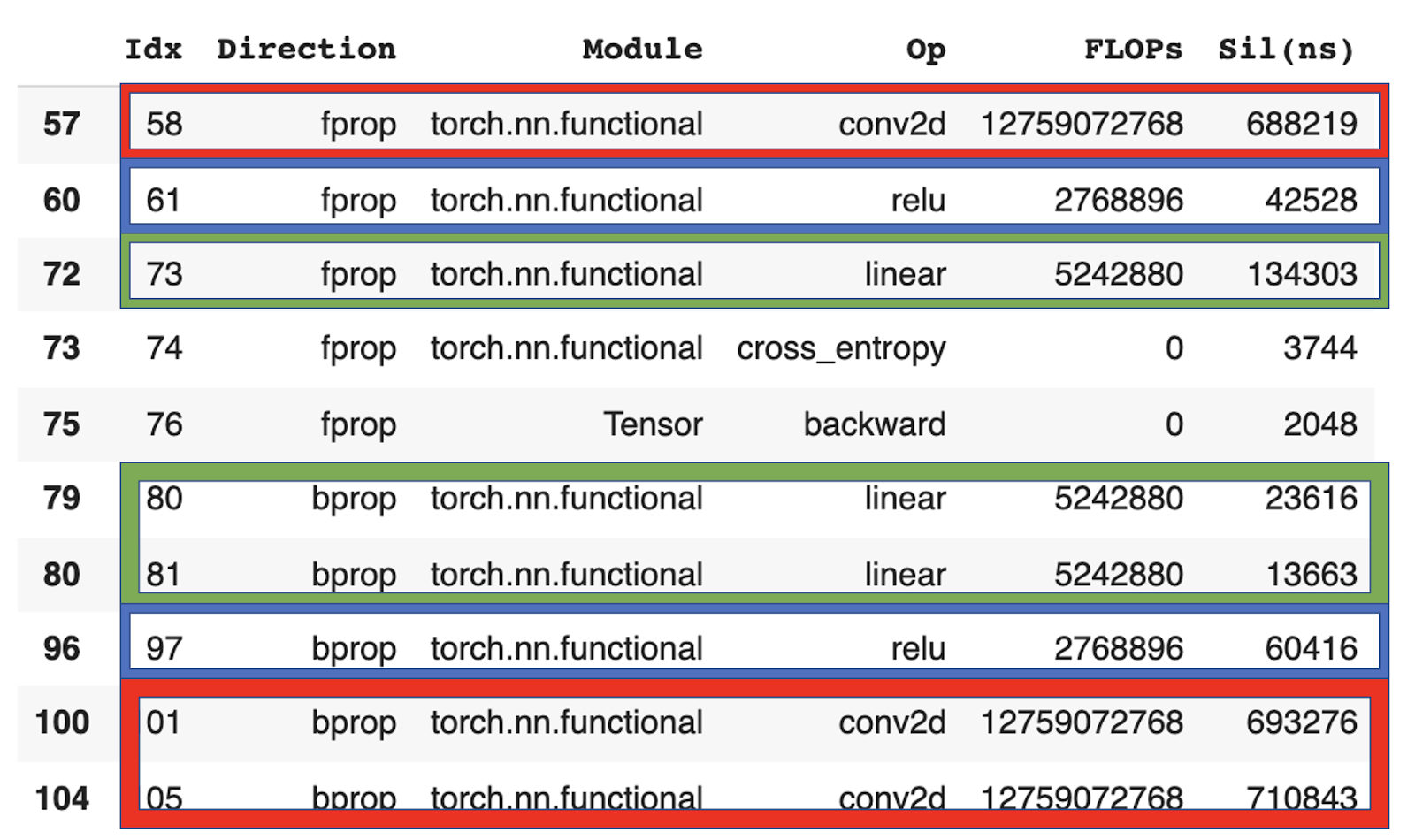

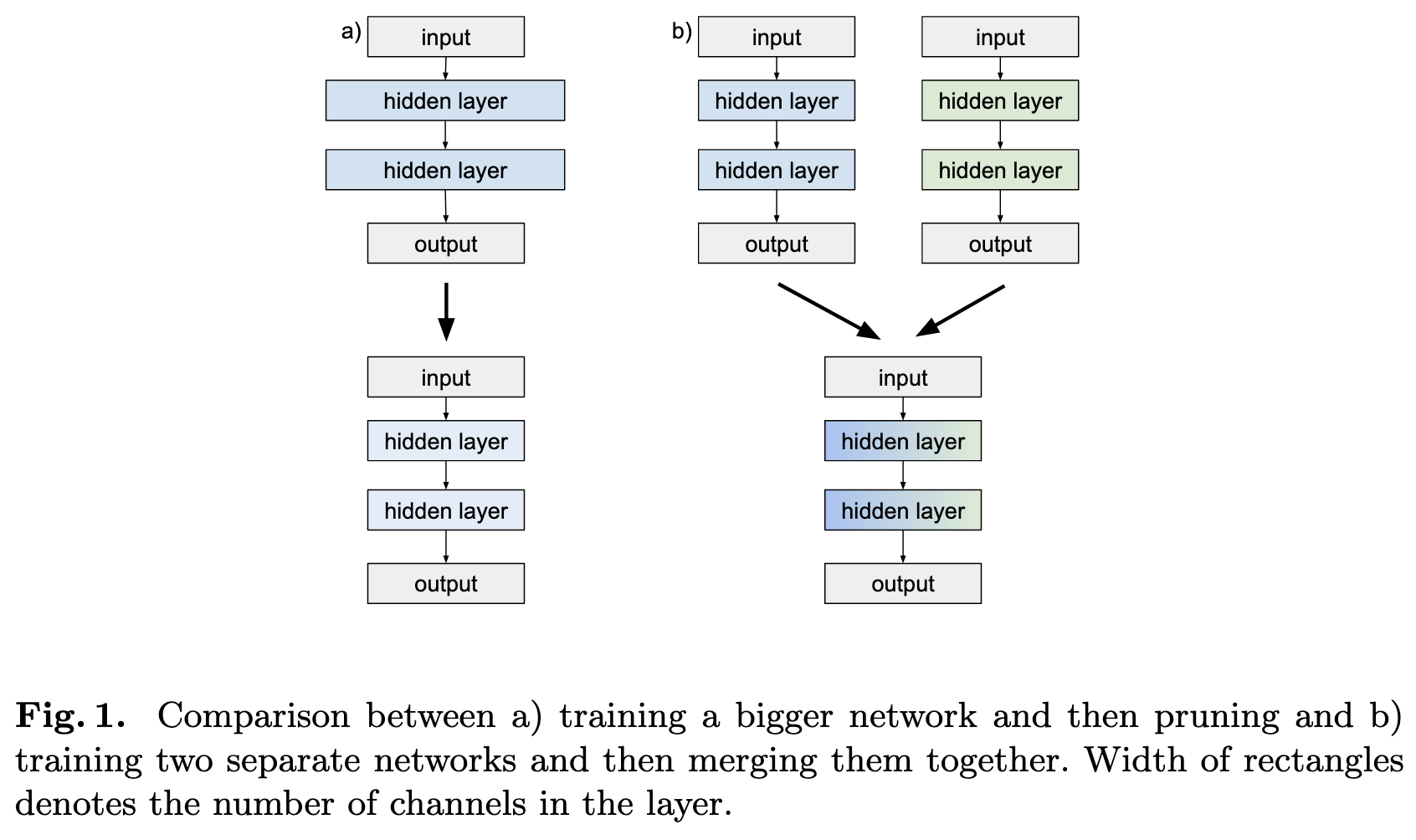

2022-4-24: Merging networks, Wall of MoE papers, Diverse models transfer better

How to measure FLOP/s for Neural Networks empirically? — LessWrong

Calculate Computational Efficiency of Deep Learning Models with FLOPs and MACs - KDnuggets
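
The FLOPs/MACs distinction can be illustrated with a short sketch. It assumes the common convention that one multiply-accumulate (MAC) counts as 2 FLOPs. It also ignores biases, activations, and normalization layers. Some profiling tools report MACs under the label "FLOPs", so check which convention a published number uses. All function names here are hypothetical.

```python
def conv_output_size(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def linear_macs(in_features: int, out_features: int, batch: int = 1) -> int:
    """MACs for a dense layer: one MAC per weight, per example (bias ignored)."""
    return batch * in_features * out_features


def conv2d_macs(c_in: int, c_out: int, kernel_h: int, kernel_w: int,
                h_out: int, w_out: int, batch: int = 1) -> int:
    """MACs for a standard (non-grouped) 2D convolution, bias ignored.

    Every output element needs c_in * kernel_h * kernel_w multiply-accumulates.
    """
    return batch * c_out * h_out * w_out * c_in * kernel_h * kernel_w


def macs_to_flops(macs: int) -> int:
    """Convert MACs to FLOPs, counting one multiply plus one add per MAC."""
    return 2 * macs


if __name__ == "__main__":
    # 3x3 convolution, 3 -> 64 channels, 224x224 input, stride 1, padding 1.
    h = conv_output_size(224, kernel=3, stride=1, padding=1)
    conv = conv2d_macs(3, 64, 3, 3, h, h)
    print(conv, macs_to_flops(conv))  # 86704128 173408256
    # Two-layer MLP 784 -> 256 -> 10, biases ignored.
    mlp = linear_macs(784, 256) + linear_macs(256, 10)
    print(mlp, macs_to_flops(mlp))  # 203264 406528
```

Dividing such an analytic FLOP count by measured wall-clock time gives the empirical FLOP/s of a forward pass.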

Training error with respect to the number of epochs of gradient

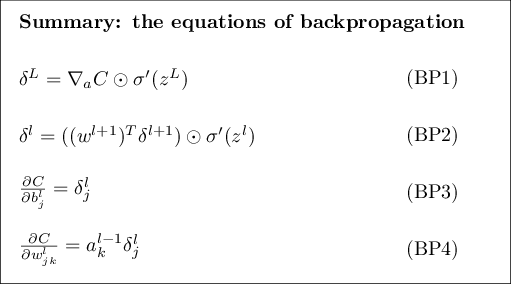

Deep Learning, PDF, Machine Learning