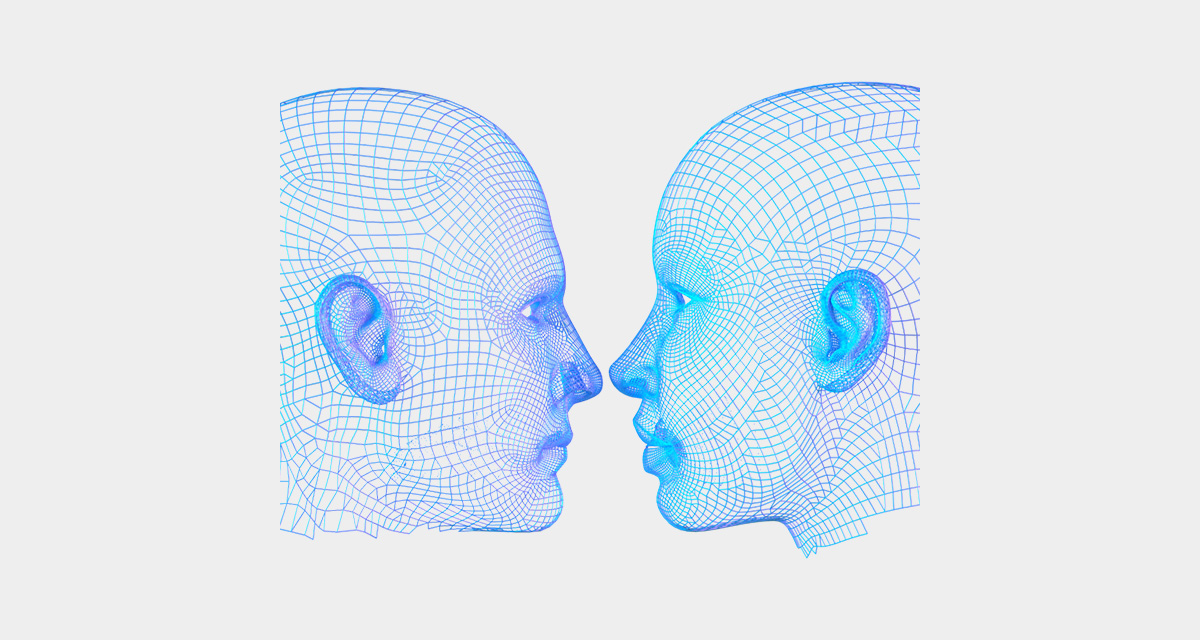

Two-Faced AI Language Models Learn to Hide Deception

(Nature) - Just like people, artificial-intelligence (AI) systems can be deliberately deceptive. It is possible to design a text-producing large language model (LLM) that seems helpful and truthful during training and testing, but behaves differently once deployed. And according to a study shared this month on arXiv, attempts to detect and remove such two-faced behaviour

Algorithms and Terrorism: The Malicious Use of Artificial Intelligence for Terrorist Purposes. by UNICRI Publications - Issuu

Against pseudanthropy

What Is Generative AI? (A Deep Dive into Its Mechanisms)

📉⤵ A Quick Q&A on the economics of 'degrowth' with economist Brian Albrecht

AITopics AI-Alerts

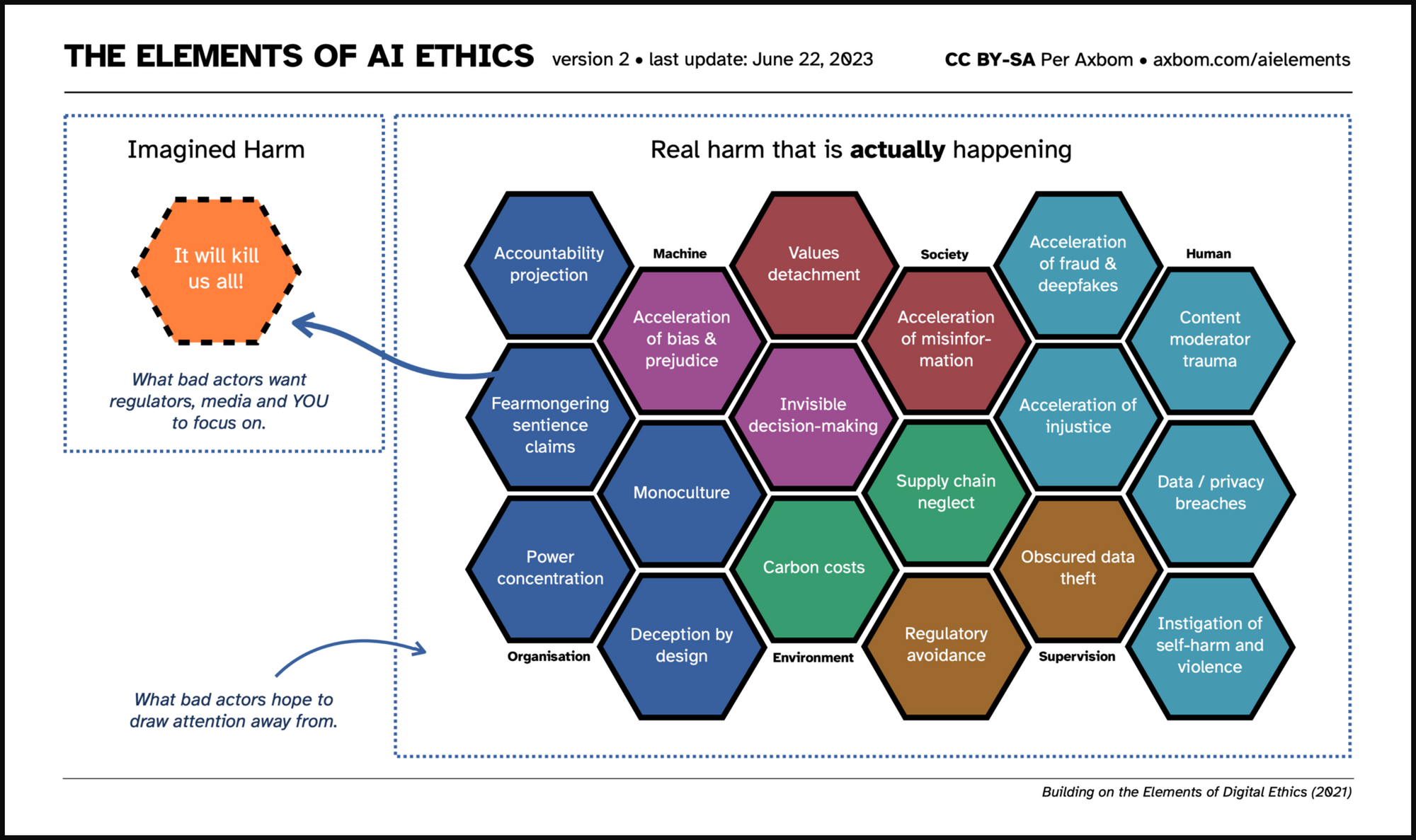

The Elements of AI Ethics

Experts on the Pros and Cons of Algorithms

Jon Abbink on X: "Not good news.. Need to be careful with A.I. 'Two-faced AI language models learn to hide deception'" / X

Biden Orders US Contractors to Reveal Salary Ranges in Job Ads : r/ChangingAmerica

Aymen Idris on LinkedIn: Two-faced AI language models learn to hide deception

AI Models Can Learn Deceptive Behaviors, Anthropic Researchers Say

What is Generative AI? Everything You Need to Know